💭Picture this: you’re a detective in a thrilling crime drama, and you’ve stumbled upon a secret vault filled with invaluable data. This data holds the key to solving the most complex mysteries in your city.

In the world of digital marketing, the Google Search Console (GSC) API is that secret vault, and Python is your trusted partner-in-crime-solving.

Just like in your favourite TV programs, where the detective relies on a set of tools and a reliable sidekick, in the world of SEO and data analytics, you’ll find that Python and the GSC API make the perfect duo for uncovering hidden insights and optimizing your website’s performance.

In this blog post, we’ll take you on a journey through the world of GSC data extraction using Python, showing you how to extract thousands of rows of data, find out which pages & queries have won & lost in clicks and impressions and make informed decisions.

Let’s get started 🚀

What is Google Search Console (GSC) API?

The GSC API is a programming interface provided by Google, which allows SEOs & developers to programmatically access the data from the Google Search Console. You can connect the GSC API with Python, extract the performance data, and store it wherever you want.
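To make that concrete, here is a minimal sketch of connecting and pulling one batch of performance data. It assumes you have installed the `google-api-python-client` and `google-auth-oauthlib` packages and already have an OAuth client secrets file (the steps for creating one are listed later in this post). The file name `client_secret.json`, the property URL, and the helper names `build_service` and `build_query_body` are placeholders for illustration; this is not the script we share at the end.

```python
from datetime import date

# Read-only scope for Search Console data.
SCOPES = ["https://www.googleapis.com/auth/webmasters.readonly"]


def build_service(client_secrets_path):
    """Run the OAuth flow in a browser and return a Search Console client."""
    # Imported here so the helper below can be used without these packages.
    from google_auth_oauthlib.flow import InstalledAppFlow
    from googleapiclient.discovery import build

    flow = InstalledAppFlow.from_client_secrets_file(client_secrets_path, SCOPES)
    credentials = flow.run_local_server(port=0)
    return build("searchconsole", "v1", credentials=credentials)


def build_query_body(start, end, dimensions=("query", "page"), row_limit=1000):
    """Build the request body for a Search Analytics query."""
    return {
        "startDate": start.isoformat(),
        "endDate": end.isoformat(),
        "dimensions": list(dimensions),
        "rowLimit": row_limit,
    }


# Example usage (placeholder file name and property URL):
# service = build_service("client_secret.json")
# body = build_query_body(date(2024, 1, 1), date(2024, 1, 31))
# response = service.searchanalytics().query(
#     siteUrl="https://www.example.com/", body=body
# ).execute()
# for row in response.get("rows", []):
#     print(row["keys"], row["clicks"], row["impressions"], row["ctr"], row["position"])
```

Each returned row carries the dimension values in `keys` plus `clicks`, `impressions`, `ctr`, and `position`, so you can store it in whatever format suits you.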

Cool, isn’t it? But what’s even crazier is this 👇

From the GSC user interface that we all use, we can only view and export 1,000 rows of data, which is pretty decent for a small website. But if you work with enterprise websites, that won’t cut it.

Solution? Yes, you guessed it right. It’s the GSC API. With the API, you can pull up to 25,000 rows in a single request and page through even more, with whatever combination of dates, dimensions, and filters you need.

But the GSC API also has some usage limits, which you can read here.
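Here is a rough sketch of how paging past a single request could look. The Search Analytics query accepts a `rowLimit` (up to 25,000) and a `startRow` offset, so you keep asking for the next page until a page comes back short. The function name `fetch_all_rows` and its parameters are our own for this example, and `service` is assumed to be an authenticated client built with `google-api-python-client`.

```python
def fetch_all_rows(service, site_url, start_date, end_date,
                   dimensions=("query", "page"), page_size=25000, max_rows=None):
    """Collect Search Analytics rows page by page using startRow offsets."""
    rows = []
    start_row = 0
    while True:
        body = {
            "startDate": start_date,   # "YYYY-MM-DD"
            "endDate": end_date,       # "YYYY-MM-DD"
            "dimensions": list(dimensions),
            "rowLimit": page_size,     # the API accepts at most 25,000
            "startRow": start_row,
        }
        response = service.searchanalytics().query(
            siteUrl=site_url, body=body
        ).execute()
        batch = response.get("rows", [])
        rows.extend(batch)

        # A short (or empty) page means there is nothing left to fetch.
        if len(batch) < page_size:
            break
        # Optional safety cap so a huge property does not eat your quota.
        if max_rows is not None and len(rows) >= max_rows:
            break
        start_row += page_size

    return rows if max_rows is None else rows[:max_rows]
```

Every page is a separate API request, so the `max_rows` cap is worth setting while you are still experimenting.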

What can you do with GSC API?

✔️ Extract traffic data for your site.

✔️ Retrieve, submit, or delete sitemaps.

✔️ Add, retrieve, or delete sites.

✔️ Get URL inspection data.

✔️ Create advanced reports.
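As a small taste of the non-traffic endpoints, the sketch below lists the properties your account can see and the sitemaps submitted for one of them. The helper names `list_sites` and `list_sitemap_paths` are made up for this example, and `service` is assumed to be an authenticated Search Console client from `google-api-python-client`.

```python
def list_sites(service):
    """Return the URLs of all properties the authenticated account can access."""
    response = service.sites().list().execute()
    return [entry["siteUrl"] for entry in response.get("siteEntry", [])]


def list_sitemap_paths(service, site_url):
    """Return the paths of the sitemaps submitted for one property."""
    response = service.sitemaps().list(siteUrl=site_url).execute()
    return [entry["path"] for entry in response.get("sitemap", [])]


# Example usage (placeholder property URL):
# for site in list_sites(service):
#     print(site)
# print(list_sitemap_paths(service, "https://www.example.com/"))
```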

Prerequisites for using GSC API

✔️ You need to have a Google account. (😉Obviously)

✔️ Your Google account should be linked with the GSC property.

✔️ A fair bit of knowledge about programming concepts.

If you do not have any programming experience, don’t worry. You can always use ChatGPT to explain the code if you get stuck somewhere. Imagine how far we have come from searching for answers on Stack Overflow. Amazing, isn’t it?

Next step? It is to actually work with the API itself.

How to use GSC API with Python to get winners & losers data?

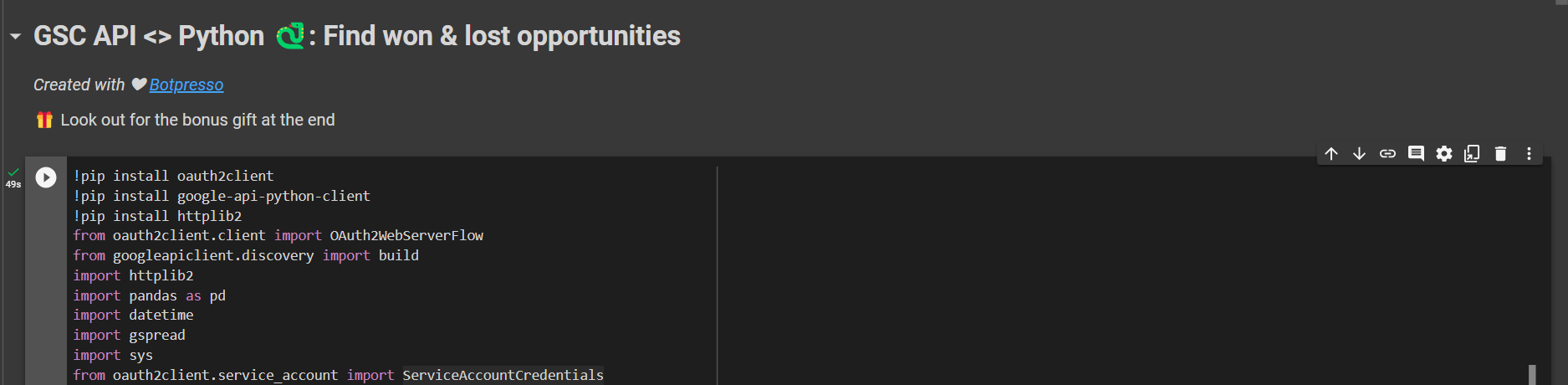

You can connect with the GSC API using Python and request the data you need from your search console property. To do that, you have to follow the steps mentioned below: (don’t worry if this is too much for you, we have something for you at the end of this section 🙂)

Step #1: Create a Google Cloud Console account.

Step #2: Create a new project or select an existing one.

Step #3: Enable the GSC API from the API library.

Step #4: Create the OAuth consent screen.

Step #5: Create credentials for authentication.

Step #6: Enable Google Sheets & Google Drive API to get data into sheets.

Step #7: Generate a JSON key for accessing the Google Sheets API.

Step #8: Create a Google Sheet and share it with the client email ID from the JSON key.

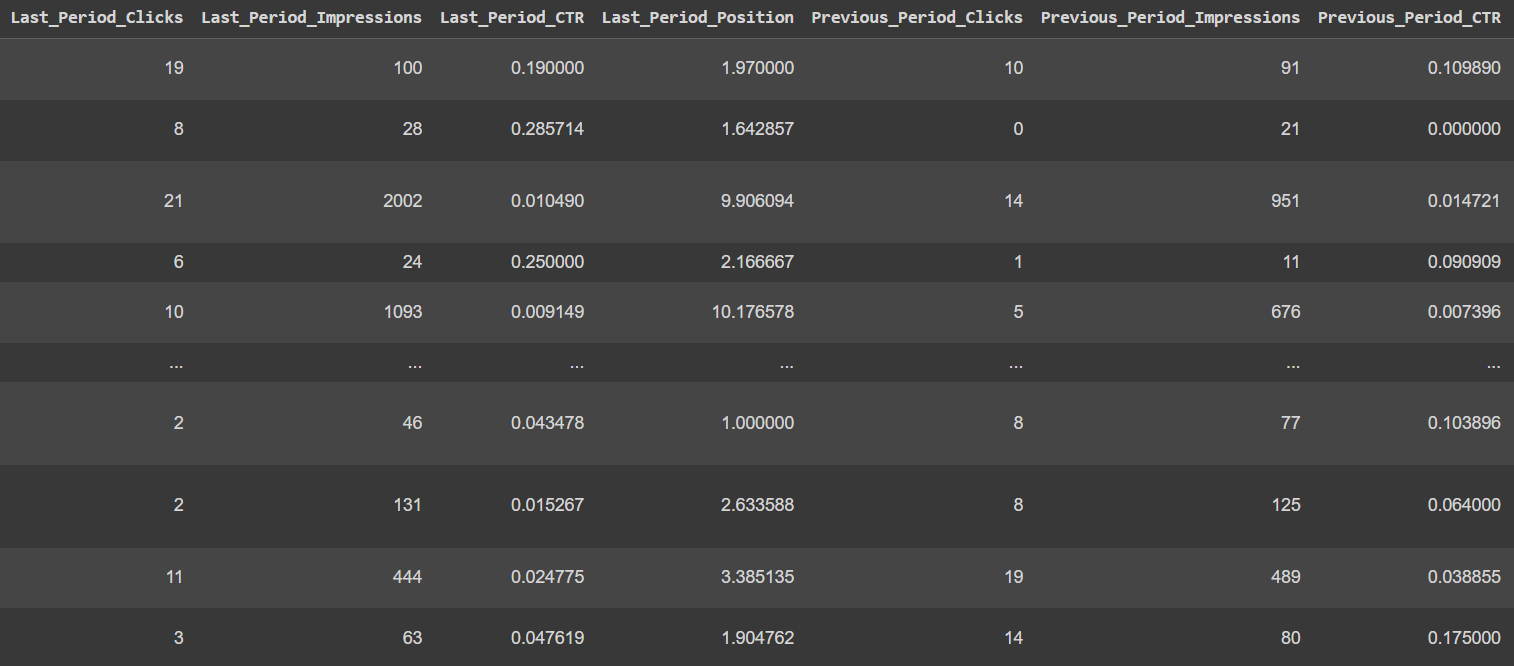

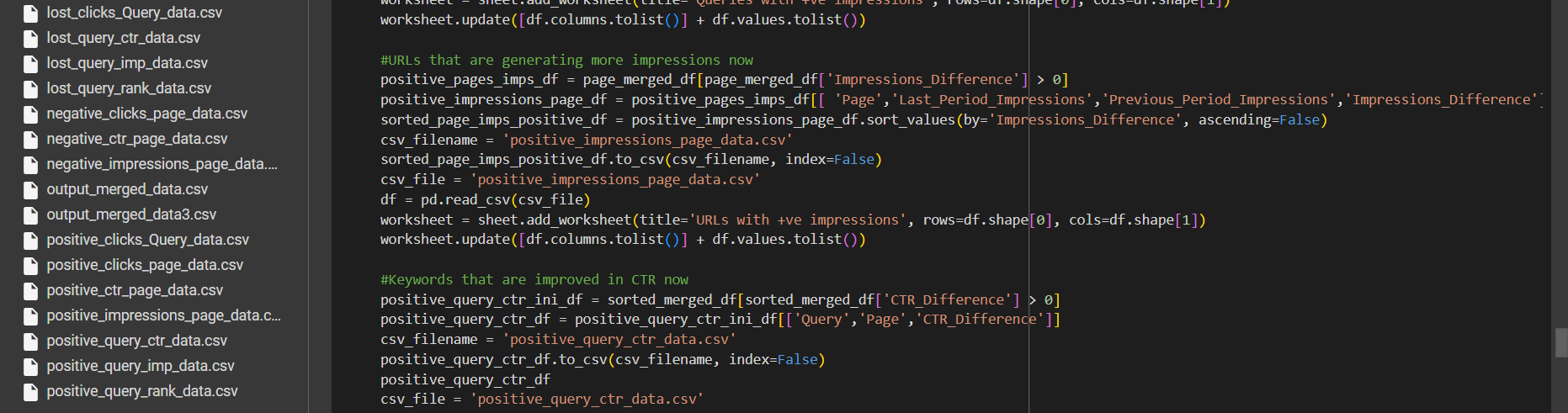

Step #9: Write a Python script that authenticates with the Google Search Console API, fetches data from the GSC property, compares it with the previous timeframe, and outputs the following data:

– Keywords and URLs generating more clicks and impressions.

– Improved CTR and avg. rankings.

– Keywords and URLs losing clicks and impressions.

– Declined CTR and avg. rankings.
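To give you a feel for the comparison in Step #9, here is a simplified sketch using only the Python standard library. It assumes you already have two lists of rows in the shape the Search Analytics query returns (`keys`, `clicks`, `impressions`, `ctr`, `position`), one per timeframe. The function names and the CSV layout are our own choices for this illustration, not the exact logic of the script we share below.

```python
import csv

METRICS = ("clicks", "impressions", "ctr", "position")


def compare_periods(current_rows, previous_rows):
    """Compute per-key metric changes between two timeframes."""
    current = {tuple(row["keys"]): row for row in current_rows}
    previous = {tuple(row["keys"]): row for row in previous_rows}
    changes = []
    for keys in sorted(set(current) | set(previous)):
        now, before = current.get(keys), previous.get(keys)
        change = {"keys": keys}
        for metric in ("clicks", "impressions"):
            # A key missing from one timeframe counts as zero there.
            change[metric + "_delta"] = (
                (now[metric] if now else 0) - (before[metric] if before else 0)
            )
        for metric in ("ctr", "position"):
            # CTR and position only compare meaningfully when both exist.
            # Note: a negative position_delta means the ranking improved.
            change[metric + "_delta"] = (
                now[metric] - before[metric] if now and before else None
            )
        changes.append(change)
    return changes


def winners_and_losers(changes, metric="clicks", top=10):
    """Split changes into top gainers and top decliners for clicks or impressions."""
    key = metric + "_delta"
    ranked = sorted(changes, key=lambda change: change[key], reverse=True)
    winners = [c for c in ranked if c[key] > 0][:top]
    losers = [c for c in reversed(ranked) if c[key] < 0][:top]
    return winners, losers


def write_csv(changes, path, dimensions=("query", "page")):
    """Write the changes to a CSV file, one column per dimension and delta."""
    fieldnames = list(dimensions) + [m + "_delta" for m in METRICS]
    with open(path, "w", newline="", encoding="utf-8") as handle:
        writer = csv.DictWriter(handle, fieldnames=fieldnames)
        writer.writeheader()
        for change in changes:
            row = dict(zip(dimensions, change["keys"]))
            row.update({k: v for k, v in change.items() if k != "keys"})
            writer.writerow(row)
```

Call `compare_periods` with the rows from the current and previous timeframes, pass the result to `winners_and_losers` with `metric="clicks"` or `metric="impressions"`, and hand either list to `write_csv` to get a file you can open in any spreadsheet tool.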

Sounds a bit overwhelming? No worries, we’ve got you covered.

We have created a complete Python script that will help you fetch 25000+ rows of data from any GSC property you have access to, find out which pages and queries have won and lost in a given timeframe, and export the data to CSV files & Google Sheets.

And yes, we have created detailed step-by-step documentation on how to do that too.

Pretty amazing, right? Here are a few screenshots of our script & the output👇

Damn, that’s a lot of data within a few clicks. Want to speed up the process of fetching the ‘REAL’ data from GSC and finding the opportunities to grow your project? Just sign up here and you will get this script directly to your inbox.

And just like that, you’d have 25000+ rows of data at your fingertips to gather valuable insights. So what are you waiting for?

Get started and let us know your experience by sharing your outcomes on LinkedIn by tagging our business page and our hashtag #GSCAPIwithBotpresso.

You can also contact us on X (Twitter) if you face any hurdles while running the script.

👋See ya on another interesting SEO automation post.