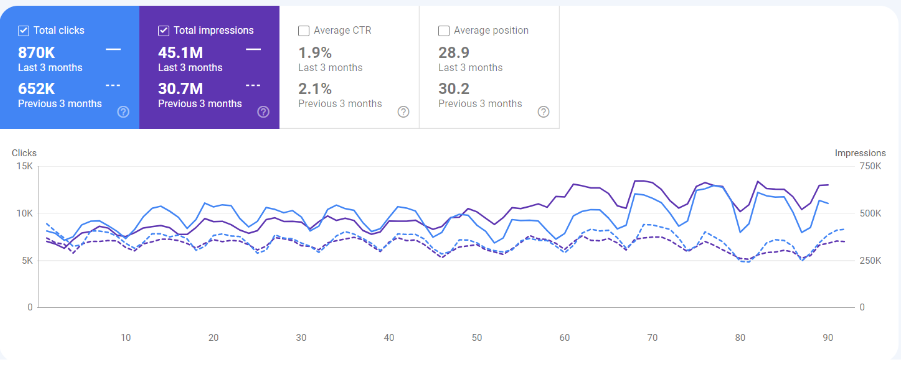

In this SaaS SEO Case Study, you will learn how we increased organic clicks by 133.4% in just 3 months.

Figuring out how to take your SaaS website to the next level?

The answer isn’t always more backlinks or more content. Sometimes it is as simple as fixing what’s broken: working on the foundation first, so that the rooftop built on it can thrive.

In this case study, we explored the untapped Technical SEO opportunities for one of our clients to grow organic traffic by 133.4% in 3 months.

Challenge

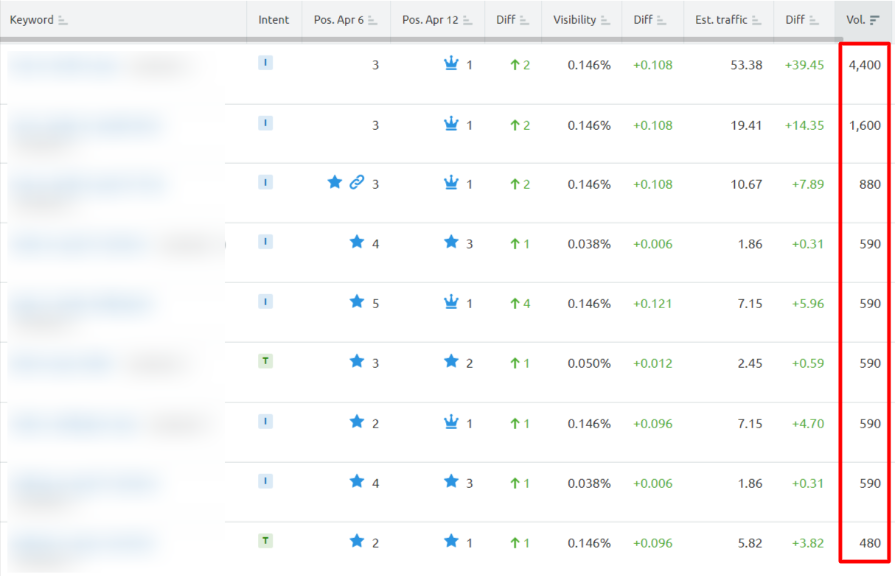

The goal was to capture an important keyword cluster tied directly to business revenue. New competitors had come to dominate this space, and we wanted to reclaim the top spot.

Opportunities we discovered

- Duplicate Meta Tags due to technical issues

- Schema Optimization

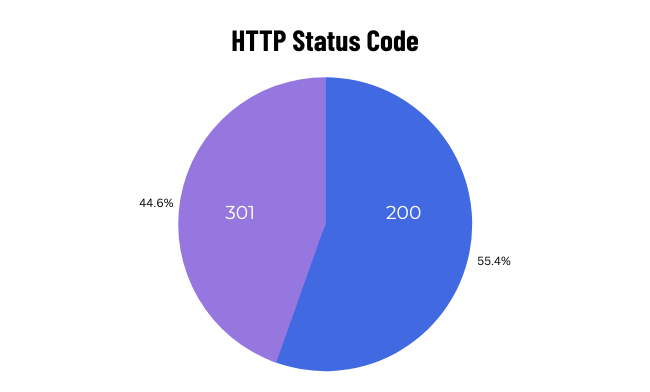

- Only 55.3% of URLs with HTTP 200 Status Code

- A Crawl Depth of >40 was discovered

Yeah, I know.

This sounds like a lot, but allow me to simplify it. Let’s dive right in and surgically understand the process. Are you excited?

Hold your response to the end.

Technical SEO could be the final key needed to unlock the doors of organic traffic.

1. Duplicate Meta Tags Issues

The website had a massive duplicate meta tag problem. It occurred because paginated pages were indexable. Paginated pages add essentially no value when they are indexed on the SERP.

Don’t get me wrong.

Pagination is a clever way of distributing content across pages, and users may even click through those pages to discover the content. However, on the SERP they carry the same meta tags and context as the first (level 1) page.

Consequently, issues like keyword cannibalization, duplicate content clusters, and index bloat arise.

Aiming to improve the bot experience on the site, we set all the paginated pages we encountered to noindex, follow.

Pagination means big trouble for big sites, especially for a site with 50,300 pages indexed on the SERP.

Every deeper page in a paginated series carries the same meta tags as the first page, which makes keyword cannibalization more likely.

To prevent this, we added a noindex, follow directive to all the paginated pages. This helped us with 2 things:

A] Preserve the precious crawl budget

B] Prevent the occurrence of keyword cannibalization
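As a rough illustration of the noindex, follow rule described above, here is a minimal Python sketch. The URL patterns (`?page=N` and `/page/N/`) and the function names are assumptions for the example, not the client's actual setup, and it assumes the first page of each series stays indexable.

```python
import re
from urllib.parse import urlparse, parse_qs

# Hypothetical pagination patterns: ?page=N or /page/N/.
# Adjust these to the site's real URL scheme.
_PAGE_PATH = re.compile(r"/page/(\d+)/?$")


def page_number(url: str) -> int:
    """Return the pagination index of a URL, or 1 if it is not paginated."""
    parsed = urlparse(url)
    query = parse_qs(parsed.query)
    if "page" in query and query["page"][0].isdigit():
        return int(query["page"][0])
    match = _PAGE_PATH.search(parsed.path)
    return int(match.group(1)) if match else 1


def robots_meta(url: str) -> str:
    """Build the robots meta tag: page 2 and beyond get noindex, follow."""
    directive = "noindex, follow" if page_number(url) > 1 else "index, follow"
    return f'<meta name="robots" content="{directive}">'


print(robots_meta("https://example.com/blog/?page=3"))
print(robots_meta("https://example.com/blog/"))
```

The `follow` part matters here: bots can still pass through paginated pages to reach the posts they link to, while the paginated pages themselves stay out of the index.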

As a result, we managed to get 99.85% unique meta tags.
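One way to track a figure like this is to export the titles from a crawl and measure how many are not shared with any other page. The sketch below assumes that definition of "unique" and a simple URL-to-title mapping; both are illustrative choices, not necessarily how the number above was produced.

```python
from collections import Counter


def unique_title_share(titles_by_url: dict[str, str]) -> float:
    """Percentage of pages whose title is not shared with any other page."""
    if not titles_by_url:
        return 0.0
    # Normalize so that case and stray whitespace do not hide duplicates.
    normalized = {url: title.strip().lower() for url, title in titles_by_url.items()}
    counts = Counter(normalized.values())
    unique = sum(1 for title in normalized.values() if counts[title] == 1)
    return round(100 * unique / len(normalized), 2)


# Made-up crawl data for illustration only
crawl = {
    "/pricing": "Pricing",
    "/blog": "Blog",
    "/blog?page=2": "Blog",
    "/features": "Features",
}
print(unique_title_share(crawl))  # 50.0
```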

How does this help?

The amount of duplicate content that SE bots perceive drops dramatically. Issues like keyword cannibalization and index bloat are minimized to a great extent. This helps with crawl budget optimization as well.

Instead of crawling paginated pages that repeat similar excerpts, Google can spend its time on the pages that carry more value, which helps those pages stay fresher in the SERPs.

2. Schema Optimization

Entities in SEO are all the rage. One thing that influences and signifies entities is the Schema code we add to a web page, and it can make or break your SEO.

On the homepage, we generated a detailed Organization Schema referencing various important properties like products, Founder E-A-T, and what the business deals in, using the knowsAbout property.
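To make this concrete, here is a minimal sketch of generating Organization JSON-LD in Python. The company name, URL, founder, topics, and profile links are placeholders, and the markup is a simplified illustration using a few standard schema.org properties (`founder`, `knowsAbout`, `sameAs`), not the exact markup deployed for the client.

```python
import json


def organization_jsonld(name, url, founder_name, topics, profiles):
    """Return an Organization JSON-LD <script> tag as a string."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        # Founder details support the E-A-T signals mentioned above.
        "founder": {"@type": "Person", "name": founder_name},
        # knowsAbout describes what the business deals in.
        "knowsAbout": topics,
        "sameAs": profiles,
    }
    return (
        '<script type="application/ld+json">\n'
        + json.dumps(data, indent=2)
        + "\n</script>"
    )


# Placeholder values for illustration only
print(organization_jsonld(
    "Example SaaS",
    "https://www.example.com/",
    "Jane Doe",
    ["project management software", "team collaboration"],
    ["https://www.linkedin.com/company/example"],
))
```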

Additionally, lots of pages were ineligible to use SoftwareApplication Schema but were using it, so we cleaned it to reference the right context on the right page.

Just to set the context, SoftwareApplication schema belongs on an app page, where the app is available either on mobile devices or on desktop as a web app.

But if SoftwareApplication schema is used on other pages, such as blog posts and FAQ content pages among many others, it sends mixed signals to Google about the content.
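A check like the one described above can be automated once each crawled page has a page type and a list of schema types. The sketch below assumes a hypothetical crawl export with `url`, `page_type`, and `schema_types` fields, and treats only pages labeled `app` as eligible.

```python
# Page types allowed to carry SoftwareApplication markup (an assumption for this sketch).
ELIGIBLE_PAGE_TYPES = {"app"}


def misplaced_software_schema(pages):
    """Return URLs that use SoftwareApplication schema on a non-app page."""
    return [
        page["url"]
        for page in pages
        if "SoftwareApplication" in page["schema_types"]
        and page["page_type"] not in ELIGIBLE_PAGE_TYPES
    ]


# Made-up crawl export for illustration only
pages = [
    {"url": "/app", "page_type": "app", "schema_types": ["SoftwareApplication"]},
    {"url": "/blog/post-1", "page_type": "blog", "schema_types": ["Article", "SoftwareApplication"]},
    {"url": "/faq", "page_type": "faq", "schema_types": ["FAQPage"]},
]
print(misplaced_software_schema(pages))  # ['/blog/post-1']
```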

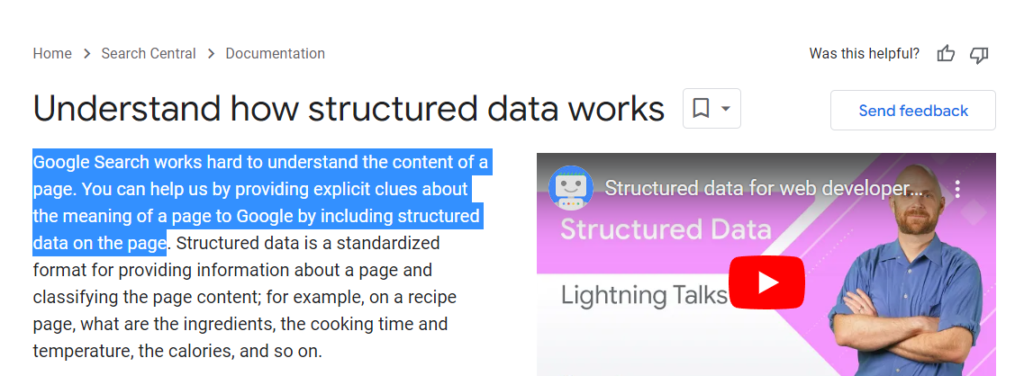

Google Webmaster Guidelines explicitly mention that Google uses the Schema code to understand the content of the page.

3. Only 55.3% of URLs were 200 Status Code Pages

This was a massive issue.

It reflects how much of the internal linking on the website pointed to non-indexable URLs.

The HTTP status codes that SE bots come across while crawling shape their experience with a site. On a very basic level, the 3 most common classes of HTTP status codes are:

2XX – Success. The page is working and is available at the URL where it is hosted

3XX – Redirection. The page’s location has changed either permanently or temporarily

4XX – Client error. For example, a 404 status code means that the page was not found, i.e. it is broken.

Ideally, SE bots would have a good experience if most of their precious time is spent crawling the 200 status code pages that carry content valuable to the users.

To quantify, 18.8K pages with a 3XX status code were receiving 57.8K internal links.
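Numbers like these can be pulled from any crawl export. As a minimal sketch, assuming a mapping of URL to status code and a list of internal links as (source, target) pairs, both hypothetical structures for this example:

```python
from collections import Counter


def status_summary(status_by_url, links):
    """Summarize status code classes, share of 200 pages, and links to redirects.

    status_by_url: dict of URL -> HTTP status code
    links: list of (source_url, target_url) internal links
    """
    if not status_by_url:
        return Counter(), 0.0, 0
    # Group codes by class: 200 -> "2XX", 301 -> "3XX", 404 -> "4XX".
    classes = Counter(f"{status // 100}XX" for status in status_by_url.values())
    ok_pages = sum(1 for status in status_by_url.values() if status == 200)
    ok_share = round(100 * ok_pages / len(status_by_url), 1)
    # Count internal links whose target responds with a redirect.
    redirect_links = sum(
        1 for _, target in links if 300 <= status_by_url.get(target, 0) < 400
    )
    return classes, ok_share, redirect_links


# Made-up crawl data for illustration only
statuses = {"/": 200, "/old": 301, "/new": 200, "/gone": 404}
links = [("/", "/old"), ("/new", "/old"), ("/", "/new")]
classes, ok_share, redirect_links = status_summary(statuses, links)
print(classes, ok_share, redirect_links)
```

Each internal link counted in `redirect_links` is a candidate for updating so that it points straight at the final 200 URL.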

This was a massive waste of Google Bot’s crawl budget.

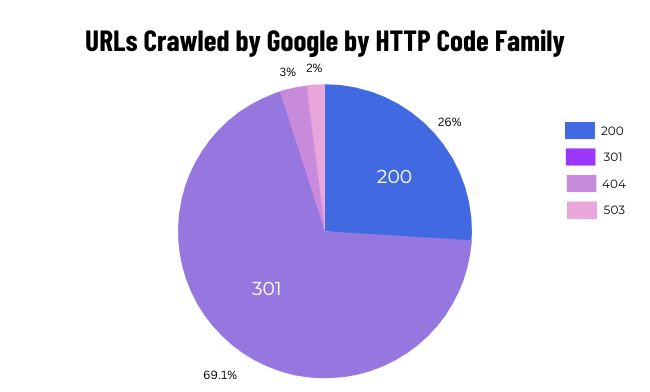

In fact, 69.4% of the pages that Google Bot crawled were actually non-indexable, and this says a thing or two about how Google Bot perceives the quality of our website.

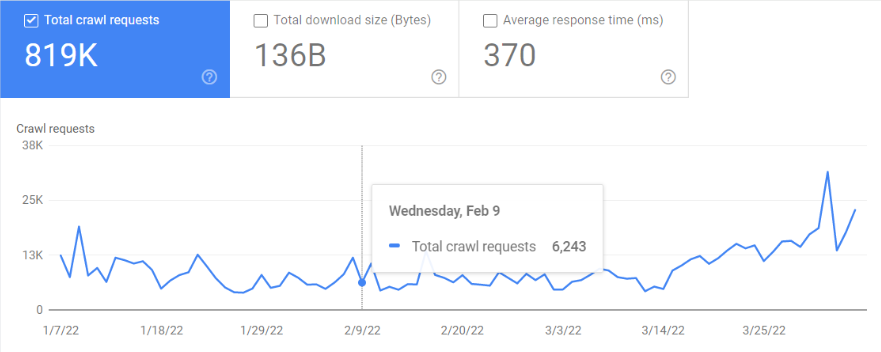

After getting the issue fixed, we did see a visible improvement in the number of crawl requests received from Google Bot every day.

We also observed a massive increase in the number of indexed pages.

4. Crawl depth of >40 was discovered

Crawl Depth/Click Depth is the number of clicks SE bots need to reach a page starting from the homepage. It signals how much importance we attach to that page on our website.

So if a high revenue-generating page with massive search volume potential is buried at a crawl depth of 14, we may know it is an important page for the business, but the SE bots infer that it mustn’t be that important. As a result, the organic visibility and rankings of the page may suffer.

Ideally, crawl depth should not be greater than 5, but 40?

It’s a massive number to look at, and this was definitely a thing we aimed to fix by strengthening the overall internal linking structure.
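Crawl depth can be measured with a breadth-first search over the internal link graph, starting from the homepage. The sketch below assumes a hypothetical link map of page to outgoing internal links and shows how a single link from the homepage shortens the path to a buried page.

```python
from collections import deque


def crawl_depths(links, start="/"):
    """Return the minimum click depth of every page reachable from start."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Made-up link graph: the money page is only reachable through pagination.
links = {
    "/": ["/blog"],
    "/blog": ["/blog?page=2"],
    "/blog?page=2": ["/money-page"],
}
before = crawl_depths(links)["/money-page"]

# Strengthen internal linking with a direct link from the homepage.
links["/"].append("/money-page")
after = crawl_depths(links)["/money-page"]

print(before, after)  # 3 1
```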

As a result, the important keyword cluster tapped into the featured snippet position.

Key Takeaways

- SEO is UXO

- Keep working on improving the bot experience on the website

- Never stop monitoring the HTTP Status codes

- When working on Schema, understand the signals sent to Google and find ways to communicate clearly with SE bots via Schema code